7 Model Monitoring Mistakes African Lenders Are Making (and How to Fix Them)

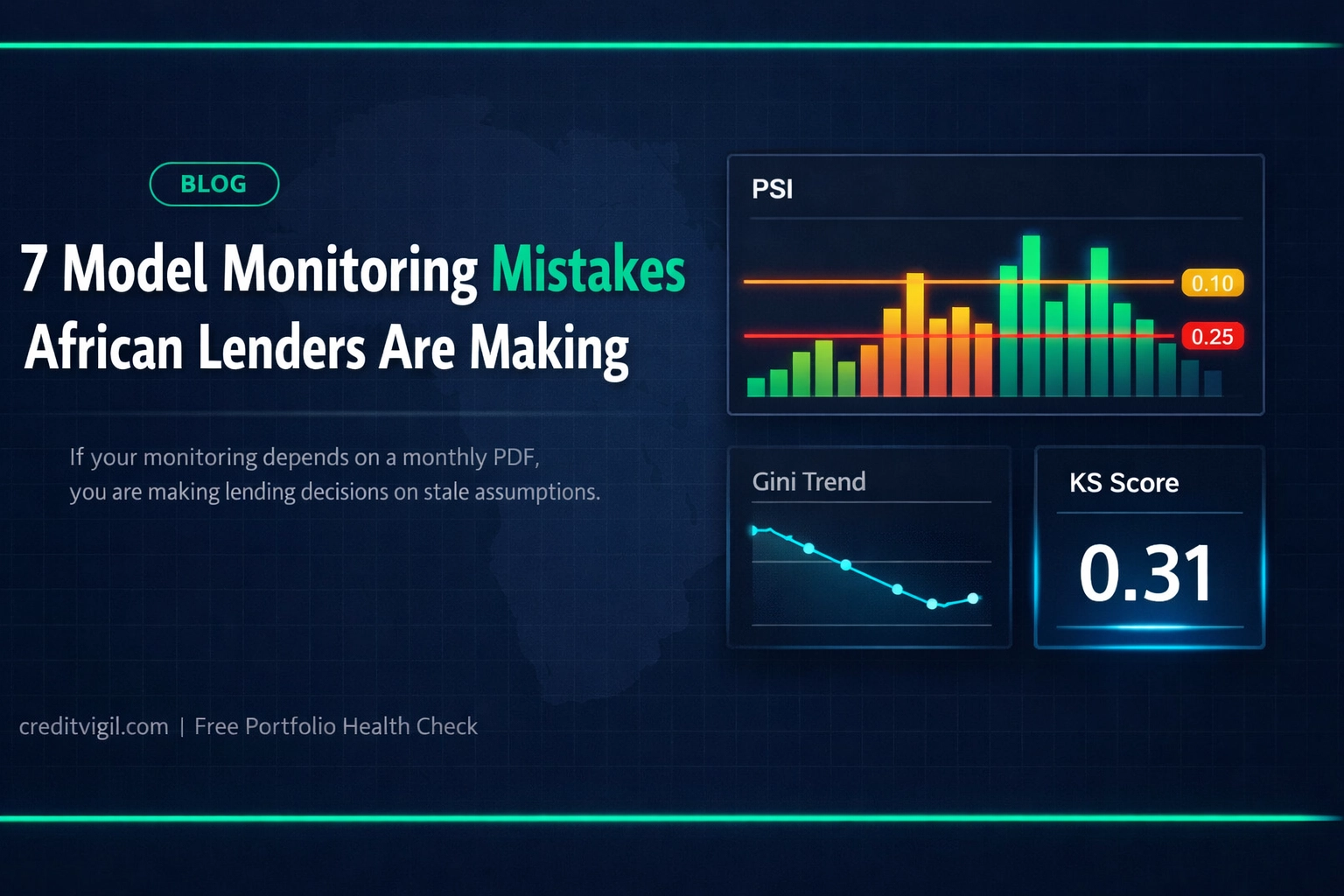

In Nigeria and Kenya, borrower behaviour can shift quickly with pricing changes, channel growth, and macro moves. By the time losses show up in 30+ days past due (DPD), your scorecard has already been wrong for weeks. The gap opens when Population Stability Index (PSI), Gini, and the KS statistic are only reviewed after the fact, and it is hard to defend in front of a risk committee or under governance expectations influenced by the CBN, CBK, and SARB.

1. You're Using Your Model Vendor's Monitoring Tools

When the same party builds the scorecard and controls the monitoring narrative, you have a structural conflict of interest. The risk is not that vendors act in bad faith; it is that their incentives and visibility are not aligned with your portfolio outcomes. For regulated lending, independence also makes board and audit conversations simpler because you can show objective evidence of drift, actions taken, and timelines. This matters in environments where governance is tightening under bodies like the CBN, CBK, and SARB.

The fix: Use vendor-neutral monitoring that works across models and teams, with a clear audit trail and consistent definitions for PSI, Gini, and the KS statistic.

2. You're Still Using Excel for Model Monitoring

Spreadsheet checks are slow, brittle, and easy to misspecify when definitions change (booked vs approved, good/bad windows, write-off timing). By the time someone exports last month's data, your scorecard has already processed thousands of new applications under a different population. Excel also struggles to keep an audit trail, which matters when internal governance meets CBN, CBK, or SARB expectations. If monitoring cannot run unattended, it will fail exactly when the portfolio is moving fastest.

The fix: Automate monitoring so PSI, Gini, and KS statistic updates run on every batch (or daily at minimum), with clear definitions and versioned outputs.

3. You're Ignoring Population Stability Index

Population Stability Index (PSI) is your early warning signal that the applicants hitting production no longer resemble the training data, and it should be a standard part of every monitoring pack. When PSI rises above about 0.25 on key drivers, the model is scoring a population that differs materially from its development sample, even if headline delinquency has not moved yet. In Nigeria and Kenya, channel mix shifts and new repayment rails can push PSI up in days, not quarters.

The fix: Track PSI per variable and for the total score distribution, set thresholds at 0.10 (investigate) and 0.25 (urgent), and tie each breach to a documented action (data check, segmentation, recalibration, retrain).
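A minimal sketch of the PSI calculation and the two thresholds above, using only the Python standard library. The function names, the decile binning taken from the baseline sample, and the small floor used to avoid taking the log of zero are illustrative assumptions, not a prescribed method; both samples are assumed to be non-empty.

```python
import math
from bisect import bisect_right


def psi(expected, actual, n_bins=10, eps=1e-6):
    """PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%).

    `expected` is the baseline (e.g. development) sample and `actual` is the
    recent production sample, for one variable or for the score itself.
    """
    # Assumption: bin edges are baseline quantiles (deciles by default).
    base = sorted(expected)
    edges = sorted({base[int(len(base) * i / n_bins)] for i in range(1, n_bins)})

    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # Assumption: floor empty bins at eps so the log is defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def psi_status(value, investigate=0.10, urgent=0.25):
    """Map a PSI value to the action levels named in the text."""
    if value >= urgent:
        return "urgent"
    if value >= investigate:
        return "investigate"
    return "stable"


baseline = list(range(1000))            # illustrative baseline scores
shifted = [s + 300 for s in baseline]   # illustrative shifted population
print(psi_status(psi(baseline, baseline)), psi_status(psi(baseline, shifted)))
```

Running the same function once per variable and once on the total score gives the per-driver and headline views described above.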

4. You're Detecting Model Drift Months Too Late

Quarterly performance reviews tell you what happened, not what is happening. Gini and the KS statistic can decay long before you see a step-change in 30+ DPD, especially when origination volumes are growing fast. In practice, a model can look "fine" on a lagging loss view while approval-rate mix and score distributions have already shifted. If you only review drift on a fixed calendar, you will miss fast, local shocks.

The fix: Track Gini and the KS statistic on a rolling window with control limits, and alert the same day either metric moves outside tolerance.
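One way to sketch this is shown below. It assumes a higher score means lower risk, computes Gini as 2 x AUC - 1 with tied scores counted as half, and uses a simple "mean minus k standard deviations" lower control limit over the recent history. The function names and the choice of k = 3 are assumptions for illustration; your tolerance may be set differently.

```python
from itertools import groupby
from statistics import mean, stdev


def ks_and_gini(scores, is_bad):
    """Return (KS, Gini) for one window of scored loans with known outcomes."""
    pairs = sorted(zip(scores, is_bad))
    n_bad = sum(1 for _, b in pairs if b)
    n_good = len(pairs) - n_bad
    if n_bad == 0 or n_good == 0:
        raise ValueError("window needs at least one good and one bad")

    cum_bad = cum_good = 0
    ks = 0.0
    concordant = 0.0  # (good, bad) pairs where the good scores higher
    for _, group in groupby(pairs, key=lambda p: p[0]):
        flags = [b for _, b in group]
        bads = sum(1 for b in flags if b)
        goods = len(flags) - bads
        # Goods here outrank every bad at a lower score; ties count half.
        concordant += goods * (cum_bad + 0.5 * bads)
        cum_bad += bads
        cum_good += goods
        # KS is the largest gap between the cumulative bad and good shares.
        ks = max(ks, abs(cum_bad / n_bad - cum_good / n_good))

    auc = concordant / (n_good * n_bad)
    return ks, 2 * auc - 1


def breaches_lower_limit(history, latest, k=3.0):
    """True if `latest` falls below mean(history) - k * stdev(history)."""
    if len(history) < 2:
        return False  # not enough history to set a limit
    return latest < mean(history) - k * stdev(history)


# Illustrative values: Gini from the previous six windows, then today's.
gini_history = [0.50, 0.51, 0.49, 0.50, 0.52, 0.48]
print(breaches_lower_limit(gini_history, 0.40))
```

Because outcomes mature with a lag, each window here has to contain loans whose good/bad flag is already known; PSI covers the period before that.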

5. You're Only Monitoring Originations

A scorecard can look stable at origination while the portfolio deteriorates post-disbursement. You see it first in roll rate migration, especially where the 0 to 30+ DPD step-up accelerates, or where cures slow down. Vintage curves then confirm whether a specific booking month is breaking away from baseline, often tied to a policy tweak, channel expansion, or collections capacity. If you do not monitor downstream, you will diagnose the problem after provisioning has already moved.

The fix: Monitor the full lifecycle: originations plus roll rate, vintage curves, and delinquency segmentation by product and channel.
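As a rough sketch of the downstream views, the block below computes month-on-month roll rates from bucket transitions and a cumulative "ever 30+ DPD" vintage curve per booking month. The input shapes, bucket labels, and function names are hypothetical, and the vintage function assumes every loan has been observed for at least `max_mob` months on book.

```python
from collections import Counter, defaultdict


def roll_rates(transitions):
    """Share of accounts moving from each bucket to each other bucket.

    `transitions` is an iterable of (bucket_last_month, bucket_this_month).
    """
    counts = Counter(transitions)
    totals = Counter()
    for (src, _), n in counts.items():
        totals[src] += n
    return {(src, dst): n / totals[src] for (src, dst), n in counts.items()}


def vintage_curves(loans, max_mob):
    """Cumulative share of each booking month that has ever hit 30+ DPD.

    `loans` is an iterable of (booking_month, first_mob_at_30plus_or_None).
    Returns {booking_month: [share by MOB 1, ..., share by MOB max_mob]}.
    """
    by_vintage = defaultdict(list)
    for booking_month, first_bad_mob in loans:
        by_vintage[booking_month].append(first_bad_mob)
    return {
        vintage: [
            sum(1 for m in mobs if m is not None and m <= mob) / len(mobs)
            for mob in range(1, max_mob + 1)
        ]
        for vintage, mobs in by_vintage.items()
    }


# Illustrative data only.
moves = (
    [("current", "current")] * 8 + [("current", "30+")] * 2
    + [("30+", "current")] * 1 + [("30+", "30+")] * 3
)
book = [
    ("2024-01", None), ("2024-01", 2), ("2024-01", 3), ("2024-01", None),
    ("2024-02", 1), ("2024-02", None),
]
rates = roll_rates(moves)
curves = vintage_curves(book, max_mob=3)
print(rates[("current", "30+")], curves["2024-02"])
```

Running both functions per product and channel gives the segmentation mentioned in the fix; a booking month whose curve sits above the others at the same month on book is the one to investigate.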

6. You're Checking Dashboards Instead of Getting Alerts

Dashboards are useful, but they are not a control system. If someone must remember to log in, filter, and interpret charts, breaches will be found late, and teams will start explaining them away instead of acting on them. In most African credit teams, WhatsApp is the operational channel, so alerts belong there with clear thresholds and context. The point is not noise; it is fast, defensible escalation.

The fix: Send targeted WhatsApp alerts for threshold breaches (PSI, Gini, KS statistic, approval-rate swings), and include the segment, time window, and recommended next check.
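The sketch below shows what such an alert body might contain. It only builds the message text; delivery is left to whichever messaging gateway your team already uses, and no WhatsApp API is called or assumed here. The `Breach` fields and the message layout are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Breach:
    metric: str       # e.g. "PSI", "Gini", "KS", "approval rate"
    value: float
    threshold: float
    segment: str      # product / channel the breach applies to
    window: str       # time window the metric was measured over
    next_check: str   # recommended first diagnostic step


def format_alert(b: Breach) -> str:
    """Render a short, self-explanatory alert for a chat channel."""
    return (
        f"[ALERT] {b.metric} = {b.value:.2f} (threshold {b.threshold:.2f})\n"
        f"Segment: {b.segment}\n"
        f"Window: {b.window}\n"
        f"Next check: {b.next_check}"
    )


# Illustrative breach only.
example = Breach(
    metric="PSI",
    value=0.31,
    threshold=0.25,
    segment="Mobile channel, short-term loans",
    window="Last 7 days vs development sample",
    next_check="Compare channel mix and top score drivers against baseline",
)
print(format_alert(example))
```

Keeping the segment, window, and next check in the message itself means the recipient can act without opening a dashboard first.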

7. You're Using Tools Built for Western Markets

Monitoring assumptions that work in slow-moving, bureau-heavy markets break in high-velocity African portfolios. You often have rapid onboarding channels, thinner credit files, shifting repayment rails, and policy changes that reshape the applicant pool overnight. Regulatory expectations also vary by market, and internal documentation needs to be ready for questions from bodies such as the CBN, CBK, and SARB. If your tooling assumes perfect data and stable populations, it will either flood you with false positives or miss real drift.

The fix: Use monitoring designed for African data realities, with robust data quality checks, segmentation, and governance outputs that risk committees can use.

What Real-Time Monitoring Actually Looks Like

Real-time monitoring is a loop: measure, compare to baseline, alert, and document the response. As a best practice, calculate PSI on the score distribution and top drivers daily or per batch, depending on volume and latency. Track Gini and the KS statistic on rolling windows, and monitor roll rate and vintage curves on a schedule aligned to repayment behaviour. The output is not just charts; it is a record of when a breach happened, what changed, and what was done next.
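The "document the response" step can be as simple as one structured record per check. A minimal sketch, assuming a JSON line per event; the field names and the `audit_record` helper are hypothetical, and where the records are stored is left open.

```python
import json
from datetime import datetime, timezone


def audit_record(metric, value, threshold, breached, segment, action,
                 detected_at=None):
    """Serialise one monitoring check as a JSON line for the audit trail."""
    detected_at = detected_at or datetime.now(timezone.utc)
    return json.dumps(
        {
            "detected_at": detected_at.isoformat(),
            "metric": metric,
            "value": value,
            "threshold": threshold,
            "breached": breached,  # decided by the caller's own rule
            "segment": segment,
            "action": action,      # what was done next, in plain words
        },
        sort_keys=True,
    )


# Illustrative record only.
line = audit_record(
    metric="PSI",
    value=0.31,
    threshold=0.25,
    breached=True,
    segment="Mobile channel",
    action="Data check opened; segment-level recalibration under review",
    detected_at=datetime(2024, 1, 15, tzinfo=timezone.utc),
)
print(line)
```

Appending one such line per metric per run gives a timeline that can be handed to a risk committee without reconstructing events from chat history.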

Conclusion

If you take only one action, make your monitoring earlier than your losses. PSI, Gini, the KS statistic, roll rate, and vintage curves are not "analytics"; they are governance controls that help you price, approve, and manage risk with current information. In markets like Nigeria and Kenya, the time between drift and portfolio impact is short, and boards increasingly expect evidence that you saw and acted. Start by calculating PSI on your top five score drivers for the last 90 days. If any feature exceeds 0.25, your model is scoring a population it wasn't built for.

CreditVigil provides independent, real-time monitoring of credit scoring models for Africa's lenders. Request a free portfolio health check.